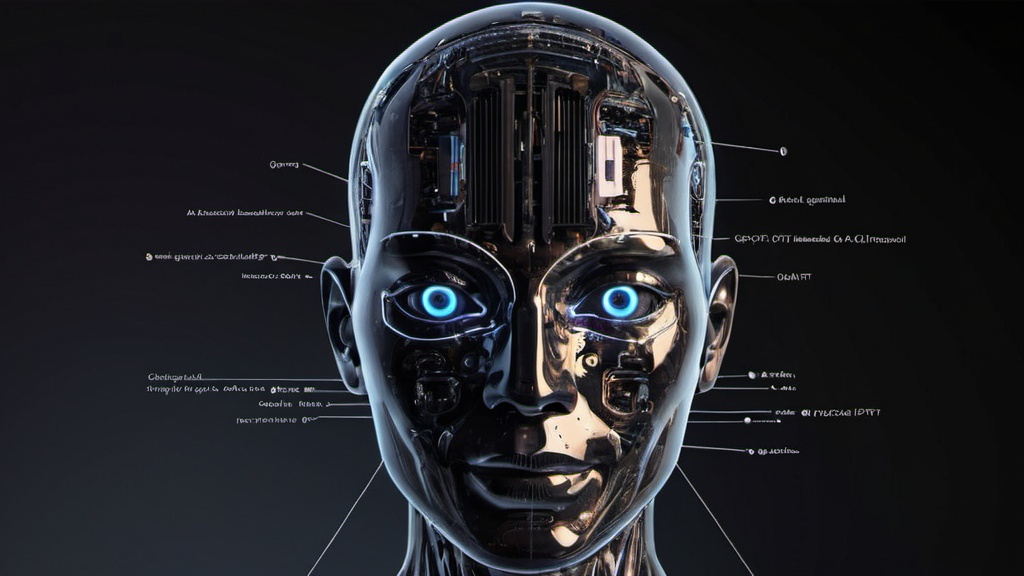

OpenAI Launches GPT-4o: Faster Model Free for All ChatGPT Users

1. Faster and More Capable

OpenAI unveils GPT-4o, a faster iteration of its GPT-4 model. In a livestream announcement, OpenAI CTO Mira Murati said the model is much faster and improves capabilities across text, vision, and audio.

2. Free for All Users

GPT-4o is available to all users at no cost. Paid users get up to five times the capacity limits of free users.

3. Iterative Rollout

GPT-4o's capabilities will be rolled out iteratively, with text and image capabilities launching in ChatGPT starting today.

4. Multimodal Functionality

GPT-4o is natively multimodal: it can generate content and understand commands in voice, text, or images. Developers can experiment with the model through the API, which offers improved speed and lower cost compared to previous versions.
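
For developers wondering what a mixed text-and-image request might look like, here is a minimal sketch. It only builds a request body in the shape used by OpenAI's Chat Completions API and does not send anything. The helper name `build_multimodal_request` and the example image URL are hypothetical, chosen for illustration.

```python
import json


def build_multimodal_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions-style request body with one text part and one image part.

    Hypothetical helper for illustration; it does not call any API.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user message can carry several content parts.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # The URL below is a placeholder, not a real image.
    body = build_multimodal_request(
        "What is in this picture?", "https://example.com/photo.png"
    )
    print(json.dumps(body, indent=2))
```

Sending this body requires an API key and an HTTP client or OpenAI's official SDK, which are left out here to keep the sketch self-contained.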

5. Enhanced Voice Mode

With GPT-4o, ChatGPT's voice mode becomes a voice assistant reminiscent of the film Her. It responds in real time and can observe the user's surroundings, making for a richer interaction.

6. Shifting Focus

OpenAI CEO Sam Altman reflects on the company's evolution, emphasizing a shift toward making its advanced AI models available to developers through paid APIs. The focus is on empowering those developers to build solutions of their own across various domains.

7. Clarifying Speculations

Before the launch, speculation circulated about an AI search engine and other new models from OpenAI. Instead, the company released GPT-4o, which keeps it at the forefront of AI innovation and arrives just ahead of Google I/O and the AI product launches expected there.